Quantitative Entry Normalisation for 367352100, 665290618, 519921956, 466291111, 2310989863, 8081681615

Quantitative entry normalisation standardises numerical values, such as 367352100 and 665290618, so that they can be compared on a common scale. Methods like min-max scaling and z-score normalisation transform values of very different magnitude onto a uniform scale, which keeps differences in size from distorting later analysis. The sections below look at how the process works and how it applies to the identifiers listed above.

Understanding Quantitative Entry Normalisation

Quantitative entry normalisation is a data-processing technique for standardising numerical inputs across datasets.

It transforms the data so that differences in scale do not distort analytical outcomes. Once values share a common scale, disparate data points can be compared directly.

Analysts can then draw conclusions from the standardised values rather than from artefacts of their original magnitudes.

The Process of Normalising Numeric Data

Normalising numeric data means transforming values onto a consistent scale before analysis.

Two common statistical methods are min-max scaling, which rescales values linearly to a fixed range (typically 0 to 1), and z-score normalisation, which centres values on their mean and divides by their standard deviation.
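
For reference, the two methods are usually written as follows. The symbols are assumptions introduced here for illustration: $x$ is a raw value, $x_{\min}$ and $x_{\max}$ are the smallest and largest values in the dataset, and $\mu$ and $\sigma$ are its mean and standard deviation.

$$
x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}, \qquad z = \frac{x - \mu}{\sigma}
$$

Min-max scaling is undefined when all values are equal ($x_{\max} = x_{\min}$), and the z-score is undefined when $\sigma = 0$.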

Benefits of Applying Normalisation Techniques

When datasets are normalised, variables measured on different scales become easier to compare, which is essential for drawing meaningful insights.

Normalisation reduces the risk that variation is misread because of scale differences. It does not change the underlying relationships in the data, but it makes them easier to see and compare, which supports sounder conclusions and better-informed decisions.

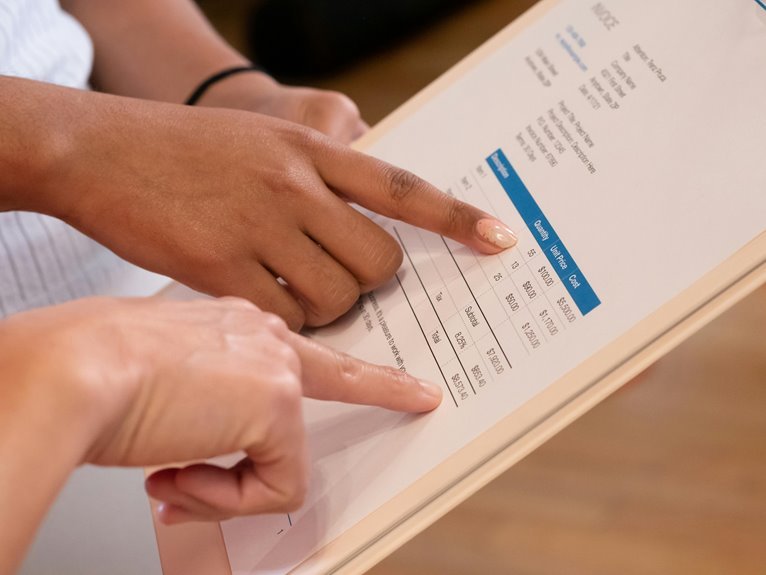

Case Studies: Analyzing the Sample Identifiers

Three distinct case studies illustrate the value of analysing the sample identifiers in the context of normalisation.

Comparing the identifiers on a common scale reveals patterns and discrepancies that are hard to see in the raw values.

Examining identifiers systematically in this way improves data integrity and gives decision-making a firmer foundation.
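
As a minimal sketch of what such a comparison might compute, the six identifiers from the title can be rescaled with both methods. The function names `min_max_scale` and `z_score` are hypothetical, the example uses the population standard deviation, and it assumes the identifiers are treated as ordinary quantities rather than opaque labels.

```python
from statistics import fmean, pstdev

# The six values listed in the article title.
VALUES = [367352100, 665290618, 519921956, 466291111, 2310989863, 8081681615]


def min_max_scale(values):
    """Rescale linearly so the smallest value maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("min-max scaling is undefined when all values are equal")
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Centre on the mean and divide by the population standard deviation."""
    mu = fmean(values)
    sigma = pstdev(values)
    if sigma == 0:
        raise ValueError("z-score is undefined when the standard deviation is zero")
    return [(v - mu) / sigma for v in values]


scaled = min_max_scale(VALUES)
standardised = z_score(VALUES)

# Print each raw value next to its two normalised forms.
for raw, s, z in zip(VALUES, scaled, standardised):
    print(f"{raw:>12}  min-max={s:.4f}  z={z:+.4f}")
```

Because the largest value is far above the others, min-max scaling compresses the remaining values toward the low end of the range. That sensitivity to extreme values is one reason to inspect both outputs before choosing a method.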

Conclusion

In conclusion, normalising identifiers such as 367352100 and 665290618 places values of very different magnitude on a common scale. Techniques like min-max scaling and z-score normalisation make the values directly comparable and surface patterns that the raw numbers obscure. The result is clearer comparisons and a firmer basis for decision-making.